As preparation for my upcoming series of interviews for Newsweek with the protagonists and practitioners of AI, I have been consuming a plethora of sources that examine the nature and origin of human intelligence and its intersection with machine intelligence in the past, present and (posited) future. I have been particularly struck by two books: Genesis, by Eric Schmidt, Craig Mundie and Henry Kissinger (yes, that Henry Kissinger), which does an impressive job of outlining the potential risks and opportunities of AI employed in the essential domains of human society and industry; and A Brief History of Intelligence, by Max Bennett, which does an equally impressive job of identifying five evolutionary innovations that gave rise to, and underpin, human intelligence today. I highly recommend reading them in their entirety, but I will be leveraging some of their thinking and argumentation here and want to give credit where credit is due.

I want to start with Genesis (what better place to start?) as it concludes with an imperative that the one thing we must preserve above all else as we explore and exploit an AI-augmented future is human dignity.

Now I, like the majority of people, have a somewhat fuzzy sense of what exactly is meant by human dignity, and indeed this has been the subject of much debate over time, starting during the Age of Enlightenment. The philosopher Immanuel Kant argued that dignity was strongly coupled to the concept of morality and required human agency, or free will: the ability of humans to decide their own course of action. Other philosophers have since extended this definition to explicitly include the moral requirement to actively assist one another in achieving and maintaining a state of well-being, and also the intrinsic value of effort or "labor." These elements both tie back to my theory that humans intrinsically value "shared effort," and this is something we need to encapsulate and value going forward.

But, to make progress, I think we need to tie the concept of human dignity to something more concrete—something that is rooted in human cognition or intelligence, which are clearly the foundational capabilities from which any concept of dignity must emerge.

A good starting point is the observation by Richard Levy of the Paris Brain Institute, in his recent exploration of the current state of understanding of the prefrontal cortex, that "by enriching the mental space for deliberation and decoupling the immediate perception from the forthcoming action, we have become capable of creating additional degrees of freedom from our environment and our archaic impulsive behavior, co-feeding a source of imagination to represent alternative or new options (creativity) and therefore what philosophers name 'free will.'"

In other words, "free will" is created within the prefrontal cortex.

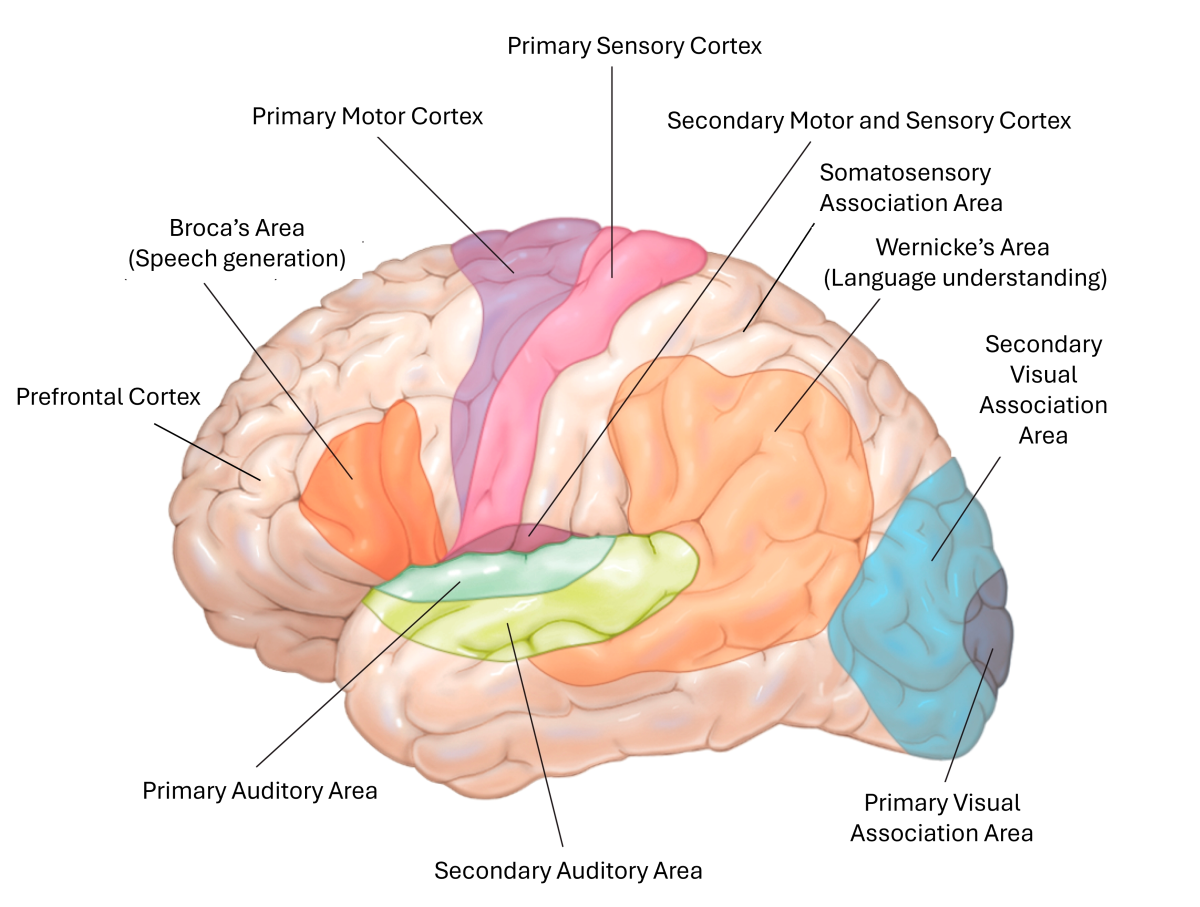

Notably, the neocortex, which comprises the prefrontal cortex and the sensorimotor cortex, is present only in mammalian brains and is considered the "epicenter of human intelligence," affording us the unparalleled ability to perform advanced reasoning based on our physical and social environment, across time, and by analogy to past experiences or imagined future options.

The Development of Human Intelligence

But to understand the connection to artificial intelligence, it is useful to take a step back to understand how human intelligence actually developed, as there is a clear evolutionary logic that should inform how we also think about the development of human-compatible AI systems.

Max Bennett, in his aforementioned book on the origins of intelligence, posits that the brain evolved in five key stages:

The Steering Brain, developed in primitive creatures called bilaterians 500 million years ago, allowing sensing of food and predators and the decision to steer towards or away from the same

The Learning Brain, developed in early vertebrates, allowing reinforcement learning of good and bad outcomes by dopamine and serotonin-mediated self-association of actual experiences

The Simulating Brain, developed in early mammals, allowing the prediction of outcomes of potential experiences, inferred and extrapolated from one's own past actual experiences

The Mentalizing Brain, developed in early primates, allowing the prediction and understanding of others' intents and knowledge and learning by imitation, and thereby the extrapolation of others' experiences and skills to predict one's own future outcomes

The Communicating Brain, developed in early humans, allowing the efficient sharing of one's own actual experiences and one's own imagined actions and interactions with others

The first three phases of brain development are dependent on the 'old' cortex and the limbic system, whereas the latter two phases are entirely dependent on the development of the neocortex. There is considerable debate about the evolutionary pressure that gave rise to this 'new cortex', but there are two primary models: the social model and the ecological model.

The social brain theory states that the higher energy content provided by the fruit diet of tree-climbing primates manifestly increased the ability to support a larger brain, which could then be used for social politicking, the associated formation of stable groups and the attendant hierarchical rules required to prevent excessive, destructive competition. Alternatively, the ecological brain theory argues that it was the need to plan fruit-picking excursions to exploit the limited periods over which different fruits were ripe that gave rise to the expansion of the neocortex, which was then repurposed for social politicking, as it leveraged the same neural structures. The prevailing consensus seems to be that a combination of the two is probably correct, and that together they drove the initial development of the primate neocortex.

A remarkable thing about the neocortex is that it comprises six layers of neurons that are the same throughout the entire neocortical structure, so it is an entirely plastic substrate onto which the whole array of higher order sensory, movement and cognitive functions can be built. So, how does it actually work? A straightforward way to understand its essential operation is as three functional blocks that operate together:

◼ The agranular prefrontal cortex (aPFC), developed in the mammalian brain, which interfaces to the sensory cortex and the limbic system to build a generative model of oneself (a self-intent model) based on understanding the hardwired reflexes and learnt successes and failures of hormonally-driven primitive behaviors, in order to predict what might, and might not, be successful strategies and drive decision-making or 'intent'

◼ The primate sensory neocortex, developed in the primate brain, which interfaces to the mammalian sensory cortex to create a sense-based model of the physical world (a physical world model)

◼ The granular prefrontal cortex (gPFC), also developed in the primate brain, which interfaces to the aPFC and the primate sensory neocortex to build a higher order model (an integrated world model) that allows explanations of different intents — both one's own and those of others — what we would call "the mind."

I Can Read Your Mind: The Theory of Mind

The net result is that primates were able to leverage the neocortex to enable what psychologists call the Theory of Mind: the ability to project our own integrated world model onto others in order to understand their intents and knowledge, and thereby engage in advanced social and group learning behaviors. And the more primates made use of this capability, the more scenario prediction and learning was possible, creating the opportunity for more adaptation to new situations and environments, and the consequent propagation of the species in a positive feedback loop.

A particularly appealing and useful operating model to describe the neocortex is that it implements a type of Helmholtz machine that comprises two primary sub-components: one that recognizes the real-world scenario (based on existing simulated reality) and one that generates new simulated realities for consideration.

The interplay of these two modes can be used to understand from where dreaming (either day- or night-dreaming) and imagination come; simply put, they arise from the generative model "gaining the upper hand" over the recognitive model, allowing our creativity and curiosity to explore new horizons and environments, rather than just continually exploiting what has already been experienced or is known. But it is critical to note that this exploration is rooted in the integrated world model that forms the foundational substrate of the primate neocortex, and so it reflects prospective reality in a grounded way. Clearly, this is not the case for today's AI systems, a point to which we will return after considering the one remaining "missing link."
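To make the two sub-components concrete, here is a minimal sketch of a toy Helmholtz machine trained with the classic wake-sleep procedure. It is an illustration under strong simplifying assumptions (one binary hidden "cause," a handful of binary sensory units, and hypothetical names such as ToyHelmholtzMachine), not a model of the neocortex. The wake step uses the recognition model to interpret real input and then improves the generative model; the sleep step lets the generative model "dream" and uses those dreams to improve the recognition model.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample(p, rng):
    """Draw a binary value that is 1 with probability p."""
    return 1 if rng.random() < p else 0


class ToyHelmholtzMachine:
    """One binary hidden cause h and n binary visible units v (illustrative only)."""

    def __init__(self, n_visible, seed=0):
        self.rng = random.Random(seed)
        self.n = n_visible
        # Generative model: a prior over h, then weights and biases from h to each v_i.
        self.g_prior = 0.0
        self.g_w = [0.0] * n_visible
        self.g_b = [0.0] * n_visible
        # Recognition model: weights and a bias from the visible units back to h.
        self.r_w = [0.0] * n_visible
        self.r_b = 0.0

    def recognize(self, v):
        """Recognition model: probability that h = 1 given the observation v."""
        return sigmoid(self.r_b + sum(w * x for w, x in zip(self.r_w, v)))

    def generate_probs(self, h):
        """Generative model: probability that each v_i = 1 given the cause h."""
        return [sigmoid(b + w * h) for w, b in zip(self.g_w, self.g_b)]

    def dream(self):
        """Run the generative model with no sensory input at all."""
        h = sample(sigmoid(self.g_prior), self.rng)
        v = [sample(p, self.rng) for p in self.generate_probs(h)]
        return h, v

    def wake_step(self, v, lr=0.1):
        """Interpret real input, then nudge the generative model toward explaining it."""
        h = sample(self.recognize(v), self.rng)
        self.g_prior += lr * (h - sigmoid(self.g_prior))
        probs = self.generate_probs(h)
        for i in range(self.n):
            err = v[i] - probs[i]
            self.g_w[i] += lr * err * h
            self.g_b[i] += lr * err

    def sleep_step(self, lr=0.1):
        """Dream an input, then nudge the recognition model toward recovering its cause."""
        h, v = self.dream()
        err = h - self.recognize(v)
        self.r_b += lr * err
        for i in range(self.n):
            self.r_w[i] += lr * err * v[i]
```

In this sketch, alternating wake_step on observed patterns with sleep_step corresponds loosely to the interplay described above: calling dream() on its own is the generative model running unchecked by the recognition model.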

So now we have a model for one level of advanced intelligence—primate intelligence—but what differentiates human intelligence? Simply put: Language.

One theory of language and cognition, known as Relational Frame Theory, argues that the building block of human language and higher cognition is relating, i.e. the human ability to create bidirectional links between things and to share those linkages. It is thought that the inclination to spontaneously point out an object in the world as being of interest, and likewise to appreciate the directed attention of another, may be the underlying motive behind all human communication.

But we have already seen that the primate brain possesses Theory of Mind, so why didn't the great apes similarly develop language? Apes can communicate but only using emotional expression, which is tied to the limbic system (in particular, the amygdala) and brain stem. All mammals have this primitive expression center, but non-human mammals—even the largest primates—don't have a higher order communication system, so what created this unparalleled function in humans?

Talk is Not Cheap: The Development of Language

There is, as with any distant anthropological transition, no one theory as to the origin of this divergence between apes and humans, nor why a capability as valuable as language has not been replicated in another form elsewhere in the evolutionary tree. But there is general agreement that it must have required an improbable set of events in order for this 'singularity' to occur only once and not yet be replicated. Understanding the uniqueness of this confluence and the resultant significant role language plays in human cognition and creativity is key to understanding our reaction to—and ability to be deceived by—language-based AI systems such as LLMs.

To make progress on this front, here is a short summary of the evolution of the Homo species and the origin of language.

◼ About 2 million years ago, Homo erectus emerged and separated from the great apes due to tectonically-induced environmental change that produced the Great Rift Valley, with the forests to the west persisting and giving rise to chimpanzees, and the resultant savannah to the east giving rise to a new ecosystem of different mammalian and primate species. The need for Homo erectus to survive and thrive in this challenging predatory environment full of much larger apex predators (including sabre-toothed tigers, lions and, bizarrely, a lion-sized otter), created pressure that favored bipedalism (to move about and scout in grasslands), advanced tool-making and planning (for hunting), as well as upper-body development (for throwing weapons) and legs/lungs for endurance (for hunting over distances)

◼ The increase in brain size required to support new and enhanced capabilities such as bipedalism and advanced tool-making and planning, and the relatively poor digestibility and therefore energy-conversion of uncooked meat (which comprised 80 percent of Homo erectus' diet), created pressure to find a more energy-rich solution, which resulted in the discovery and use of fire to cook food, yielding greater nutritional value along with ancillary benefits (warmth and security)

◼ The resultant increase in supported brain size was again positively reinforced, resulting in greater ability to outwit and ensnare hunted prey, which in turn led to increased support for larger brain energy consumption. The net effect of this ever-increasing brain size created pressure for premature birthing (in order for the brain/skull to fit through the birth canal of smaller-hipped bipeds), with the infant brain consequently required to grow massively after birth over a multi-year period (12 years in Homo sapiens). This, in turn, created parental pressure, which was eased by the rise of monogamy, closer ties to extended families and empathic and altruistic behavior towards the larger Homo group. It is from the resultant pressure to extend and expand group cooperation that language development seems to have arisen.

It is now believed that language originated with Homo erectus, with simple icon-based words derived from sensory experiences, before more abstract concepts emerged and Homo sapiens evolved the ability to connect different clusters of words into related concepts and therefore use metaphors for the first time. Language continued to evolve with a progressive increase in abstraction, on top of which we built narratives, religions and our sense of morality, which are a "grounded" means to restrict the excessive individualism that undermines the group cohesion that was the purpose of language in the first place.

It is interesting to contemplate that there is no new neocortical functional area that is associated with language, so all hominids could have developed language, but only Homo sapiens was subject to the perfect storm of pressures that created the underlying fabric for the evolution of rich language.

Note: It is believed that language is built on a hard-wired protocol of gestural and vocal turn-taking that evolved first in early humans. (Chimpanzee infants show no such behavior.) This, combined with the ability to support joint attention, as outlined above, allows the assignment of shared abstract labels to things, forming the basis of language which, with the addition of a learned grammar, allows efficient utilization of these labels to form myriad statements, observations, concepts and queries.

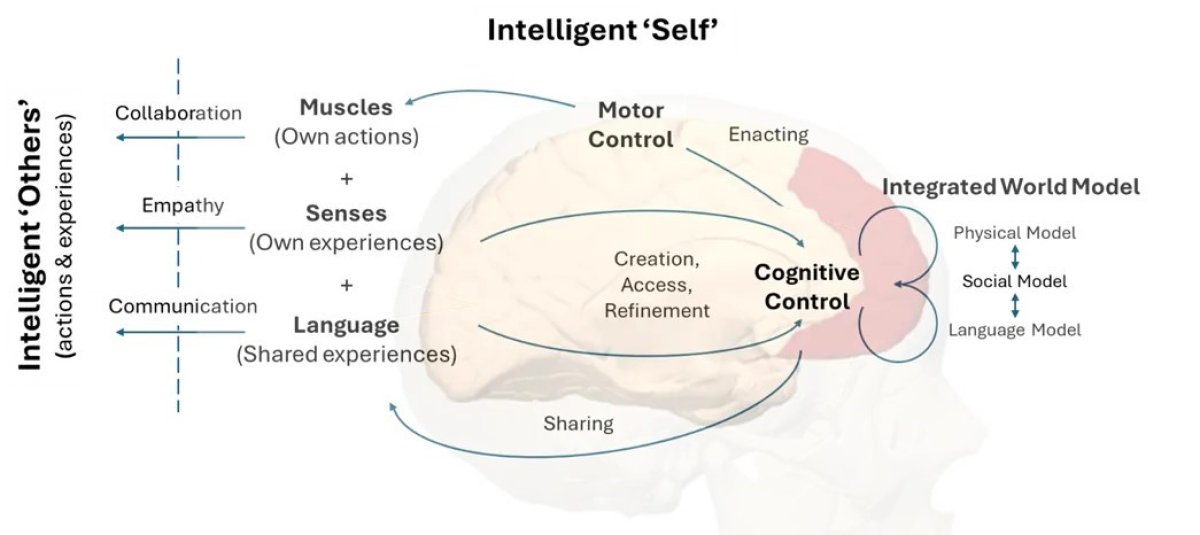

So, if we now bring everything together, we end up with the schema depicted in the accompanying figure.

In short, the neocortex is truly the substrate for human intelligence and supports configurations that enable physical world modeling, social interaction and understanding, and language modeling and utilization. These constituent models are created and refined by sensory and social experience and communication with others, allowing highly efficient teaching and learning.

Importantly, language plays a particularly critical role in accessing the integrated world model, as language is the means by which these models are transferred to, or shared with, others, and are also refined by discussion and learning from others. While it has been shown that cognition (and the associated analytical and thinking functions) does not require language to occur, it has also been shown that cognition is enhanced or 'catalyzed' by the medium of language. Indeed, a neocortical center known as Wernicke's Area, which is associated with language understanding, is directly connected to a region associated with critical mentalizing skills.

In essence, language provides a unique portal to our internal world and is the conduit through which it is shared.

The Seduction of LLMs

So now we have the connection to our experience of LLMs and their manifest facility with language; we are unavoidably seduced by this ability and attribute human-like intelligence where there is none. The current set of LLMs are no more than stochastic parrots, in the words of Emily Bender, and do not have the intelligence of even a rat, let alone a cat or a human, as Yann LeCun has famously stated.

In many ways, this facile deception can be understood by recognizing that the language center in our own brains supports an 'auto-complete' function that allows rapid interaction and dialog without any thought, and so does not require involvement of the more sophisticated and effortful cognitive capabilities and physical world model. This is analogous to, and not unrelated to, the System 1 (unconscious and effortless) versus System 2 (conscious and effortful) behaviors identified by the behavioral economist, Daniel Kahneman, in his massively influential book, Thinking, Fast and Slow. Simply put, System 1 can be regarded as turning inputs received from the individual's environment into symbols that either trigger an automatic reaction for rapid response, or are passed to System 2 for further cognitive analysis and planning, based on previously acquired background knowledge and new simulations for unknown or novel scenarios. This dual-mode operation allows us to minimize both the energy consumed and the latency of response to circumstances that require immediate reaction, while still allowing for the engagement of our integrated world model whenever we experience something unfamiliar and unpredicted.
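The dual-mode routing just described can be sketched in a few lines. This is only an illustrative toy, assuming a hypothetical lookup table of reflexes to stand in for System 1 and a caller-supplied function to stand in for System 2; none of these names come from Kahneman's work.

```python
from typing import Callable, Dict, Tuple


def make_dual_process_agent(
    reflexes: Dict[str, str],
    deliberate: Callable[[str], str],
) -> Callable[[str], Tuple[str, str]]:
    """Build a responder that tries a fast reflex first and deliberates only if needed.

    reflexes   -- hypothetical table of familiar stimuli and their automatic reactions
    deliberate -- hypothetical slow, effortful planner used for unfamiliar stimuli
    """

    def respond(stimulus: str) -> Tuple[str, str]:
        # System 1: a familiar symbol triggers an automatic, low-cost reaction.
        if stimulus in reflexes:
            return "system1", reflexes[stimulus]
        # System 2: anything unfamiliar is handed to the slower planning path.
        return "system2", deliberate(stimulus)

    return respond
```

For example, an agent built with the reflex table {"hot stove": "withdraw hand"} answers "hot stove" through the fast path, while any stimulus missing from the table is passed to whatever deliberate function was supplied. The design choice worth noticing is that the expensive path is never invoked when the cheap one suffices, which is the energy and latency saving the paragraph above describes.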

There is a note of caution that is worth highlighting about language. Since language is predicated on arbitrary symbols and labels for objects and concepts that are not well-grounded without a companion integrated world model, language usage is a risky business, as humans can be deceived by language as much as enabled by it. This deception mechanism is in play when we experience what we call a "hallucination" by LLMs.

Broadly, LLMs can therefore be thought of as follows: System 1-type language generation machines that are only pseudo-grounded in a virtual reality (derived from massive troves of web texts), that fool us into believing that they also possess a System 2 cognitive model. Yann LeCun makes the insightful analogy that these models are akin to students who learn by rote and develop no real understanding, versus those who do the hard work of creating mental models of concepts and knowledge bases.

Current AI systems also differ in another profoundly important way; they learn (are trained) only periodically, and do not adapt or expand their capabilities continuously. In contrast, human learning is continuous, not only in terms of knowledge acquisition but also the ability to trigger a new set of simulations of possible outcomes and the concomitant set of actions, in the presence of a new, uncertain scenario. A similar level of adaptability will be critical in order for any AI system to be of persistent value to any human task or process.

The Future Isn't Here...But It's Not Far Away

It should now be clear that any discussion of Artificial General Intelligence (AGI) is really hyperbolic conjecture amplified by the rise of maximum engagement click-bait algorithms. Yann LeCun has observed that the term 'general' is not even appropriate in the context of human intelligence, as human intelligence is actually quite specifically honed around us as an imaginative, expansive, socio-biological species living in the physical world. He proposes that Human-Level Intelligence (HLI) would be a more useful term to describe a system that can replicate human abilities. However, what we really want is a system that understands HLI, but extends beyond that, which some have recently termed "artificial super-intelligence." But "super" connotes "above," i.e. superior, so I prefer to call this concept artificial ultra-intelligence (AUI), since "ultra" means "beyond." We, as humans, have always created machines that allowed us to go beyond our human limits: in speed, in perception, in distance, in power, in communication, in computation. So, now our goal must be to go beyond our cognitive power and create an AUI system that incorporates a recognizable level of HLI.

To understand the inevitability of this evolution, consider the following: Humans started with knowledge and inner model-sharing via kin relationships (family and extended family) and then larger, allied and aligned local social groups. The concomitant ability to imagine and create unexperienced realities allowed us to build compelling narratives (mythologies, religions, cultural customs etc.) which were used to bind disparate social groups together to create a common purpose that allowed an unprecedented scale of cooperation, and the domination and elimination of all other hominids over time. And when our collective knowledge and stories could not be held within the limits of a local set of human brains, we invented writing (and subsequently printing) to create an ultra-human repository of principles, theorems and knowledge that could be distributed and massively shared. The subsequent conversion from analog to digital assets and distribution has, in turn, led directly to the current internet-mediated digital communications age, which has recently been augmented by the availability of new computing systems that have allowed us to calculate previously incalculable things with speed and scale, and associated robotic systems (both software and hardware robots) that allow unprecedented levels of augmented analysis and execution.

This quest is inexorable and undeniable; we are now on the precipice of a new phase of human societal change, one that will release us from the constraints of human biological capacity (both individual and collective) and timescales. We will no longer be limited to roughly 8 billion advanced intelligences that interact and compute in seconds, but only adapt on the timescale of years; we will be augmented by billions or even trillions of AUI systems that collectively compute new outcomes from myriad scenarios with unprecedented complexity in seconds. Of this much we can be sure, but there is a massive uncertainty regarding what level of HLI such systems must exhibit in order to be compatible with the array of different human intelligence(s), culture(s), and moralities. And, equally, how we instantiate these systems and ensure we can manage and control them in a manner that is compatible with human dignity.

If you have made it this far in this exploration of human intelligence and the human-machine symbiotic future, you deserve a payoff, so I will end by trying to outline what I think are essential components of any such human-AUI co-existence in the future age of Homo technicus, as it is termed in Genesis.

A Foundation for AUI

Building on the preceding discussion and the critical thinking of eminent others, I think future AUI systems must support:

1. Full transparency regarding their presence in any interaction, as well as their origins, influences, operation, validity and benevolence, as outlined here

2. Respect for human dignity in the largest sense of the word, including elevating or enabling free will, freedom of expression and valuation of effort applied both by individuals and groups, as I have described here

3. Predictable human control, adopting David Eagleman's postulate that the acceptable perimeter of the "self" is defined by what we can "predictably control," based on the brain's acceptance of physical and virtual systems (or 'limbs') that it can reliably control, and rejection of those it can't

4. An Integrated World Model that reflects and encapsulates human experience, with all the attendant physical, social and linguistic components, and supporting continuous learning and adaptation. This complex, cognitive System 2 should be combined with a simplistic, reactive System 1 for optimal efficiency and human-understandable explainability.

Of course, these things are easy to state but infinitely harder to realize. However, as many leading voices and initiatives have recently opined, it is critical that we define the boundary conditions and constraints of these systems now, in order to find a relatively smooth path towards our augmented future, rather than the dystopian alternatives. One piece of particularly notable work in this direction is the open paper by Yann LeCun and related technical publications on how we might build a system with a world model (or models) at its core, and in a way that has similarities to how the human brain functions, thereby allowing for the intriguing possibility that elements 1-3 in the above list may be possible to encode or encapsulate at the same time.

In the famous words of Looney Tunes, "That's all Folks" (for now). I look forward to continuing to share the evolution of this thinking as I exchange my own world models and learn from the brilliant minds of the interviewees in my coming Newsweek AI series. We will be exploring this question of what should comprise AUI, as well as where current AI systems are having a meaningful commercial or cultural impact, and how this could, and should, evolve over the coming months, years and beyond.

Acknowledgements

Along my journey of discovery there have been many insightful writings and sources that have significantly influenced and informed my thinking. I have cited many of them throughout this article, but it would be remiss not to include a list here: A Brief History of Intelligence by Max Bennett; Genesis by Eric Schmidt, Craig Mundie and Henry Kissinger; Nexus by Yuval Noah Harari; AI Snake Oil by Arvind Narayanan and Sayash Kapoor; Superforecasting by Philip Tetlock and Dan Gardner; Revenge of the Tipping Point by Malcolm Gladwell; Thinking, Fast and Slow by Daniel Kahneman; Inner Cosmos Podcast Series by David Eagleman; video dissertations by Yann LeCun and Rodney Brooks, as well as a number of stimulating exchanges with Stephen Fry.

A version of this article previously appeared on the author's Medium page.

About the writer

Marcus Weldon, a Newsweek senior contributing editor, is the former president of Bell Labs.